A walk-through of finetuning a LLM

The introduction of open-weights LLMs like Meta’s Llama 3.2 series and Microsoft’s Phi series of models has brought accessibility to near-frontier model performance within reach of the independent AI researcher, student or the curious hobbyist. These models with the ecosystem that has spawned around it like Google’s Colaboratory to work on these models and the HuggingFace platform to share datasets and models have greatly leveled the playing field for the committed enthusiast.

This post discusses how to efficiently finetune a LLM on a dataset using techniques such as quantization and LoRA. We utilize Colaboratory as a platform to train a model using the available accelerators and HuggingFace’s API to access datasets for model training and as a suitable platform to publish and share the model. The full code is provided on GitHub for adapting to other LLMs and datasets as desired.

Task

The use-case for the walkthrough is supervised finetuning (SFT) of the Llama 3.2 (3B) model on a dataset of Malayalam language question answer pairs.

The model used is Meta’s Llama 3.2 3B instruction tuned model [1]. The dataset of Malayalam question answer pairs is prepared from ai4bharat’s Indic language Question Answering dataset [2]. The code takes advantage of HuggingFace’s Transformers library to configure, train and publish the model and the `peft` library for efficient finetuning of the model using Low Rank Adaptation (LoRA) [3].

Dataset preprocessing

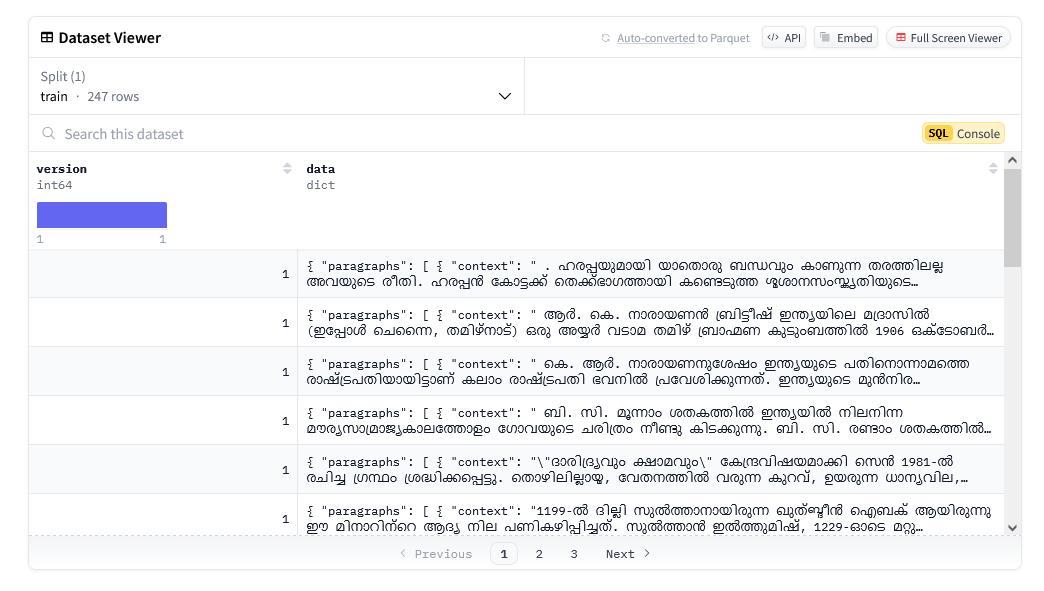

The dataset is available as a JSON file. Each object of the file consists of a context – a short passage on a topic followed by a series of questions related to the passage and their attendant answers.

We see that the dataset consists of two columns – a `version` column and a `data` column where the `data` column contains the context and the question-answer pairs. We would like to feed a context, followed by a question and its attendant answer to the LLM. Accordingly we apply the following preprocessing step to each object of the original dataset.

def process_data_sample(example):

processed_example = ()

context = example["data"]["paragraphs"][0]["context"]

for j in range(len(example["data"]["paragraphs"][0]["qas"])):

question = example["data"]["paragraphs"][0]["qas"][j]["question"]

answer = example["data"]["paragraphs"][0]["qas"][j]["answers"][0]["text"]

# Prepare the processed example for a History Question Answering System

processed_example = processed_example + (

f"{context}\n\n"

f"ഉപയോക്തൃ ചോദ്യം:\n{question}\n\n"

f"ഉത്തരം:\n{answer}\n\n",

)

processed_example = "".join(processed_example)

return processed_example To each example context-question-answer we add "ഉപയോക്തൃ ചോദ്യം" (question) to the beginning of the question and “ഉത്തരം” (answer) to the beginning of the answer.

Efficient training techniques

Now that we have the dataset ready, we setup the configuration for the model – Meta’s Llama 3.2 3B paramter instruct model.

We use the Quantization followed by LoRA (QloRA) method to finetune our model. 4-bit quantization is used to compress the LLM.

from transformers import BitsAndBytesConfig

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch.bfloat16,

)Low Rank Adaptation (LoRA) is used to further prepare the model for efficient finetuning. Here we apply LoRA to both the attention layers and the MLP layers.

from peft import LoraConfig

peft_config = LoraConfig(

r=16,

lora_alpha=32,

lora_dropout=0.1,

bias='none',

task_type="CAUSAL_LM",

target_modules=["q_proj", "k_proj", "v_proj", "o_proj",

"gate_proj", "up_proj", "down_proj",],

)We use the `SFTTrainer` class from the `trl` library for our training.

Publishing of the trained model:

Finally, we upload the model to the HuggingFace hub so that it can be shared easily.

trainer.push_to_hub(commit_message = "end of finetuning", model_name = "llama-3.2-3b-sft-indicqa-ml-v0.1")References:

[1] Meta, Llama 3.2 (3B) Instruct, https://huggingface.co/meta-llama/Llama-3.2-3B-Instruct, September 2024, Last accessed: October 2024

[2] ai4bharat, ai4bharat-indicqa-ml-202410, https://huggingface.co/datasets/sepiatone/ai4bharat-indicqa-ml-202410, October 2024, Last accessed: October 2024

[2] HuggingFace, peft, https://huggingface.co/docs/peft/index, 2022, Last Accessed: October 2024